Where I left the discussion there was a question mark. What does conformity mean when constant change is part of the way an aircraft system works?

It’s reasonable to say that’s nothing new. Every time I boot up this computer, it goes through a series of states that can be different from any it has been through before. Cumulative operating system updates are regularly installed. I depend on the configuration management practices of the Original Equipment Manufacturer (OEM). That’s the way it is with aviation too. The more safety critical the aircraft system, the more rigorous the configuration management processes.

Here comes the “yes, but.” Classical complex systems are open to verification and validation. They can be decomposed and reconstructed and shown to be in conformance with a specification.

Now we are moving beyond that situation, to one where levels of complexity prohibit deconstruction. Often, we are stuck with viewing a system as a “black box”[1], because its internal workings are opaque or “black.” This abstraction is not new. The treatment of engineered systems as black boxes dates from the 1960s. However, this has not been the approach used for safety critical systems. Conformity to an approved design remains at the core of our current safety processes.

It’s as well to take an example to illustrate where a change in thinking is needed. In many ways the automotive industry is already wrestling with these issues. Hands-free motoring means that a car takes over from a driver and acts as a driver does. A vehicle may be semi-autonomous or fully autonomous. Vehicles use image processing technologies that take vast amounts of data from multiple sensors and mix it up in a “black box” to arrive at the control outputs needed to drive safely.

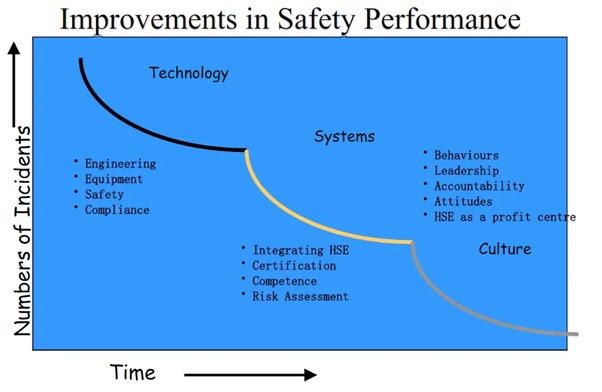

Neural networks or heuristic algorithms may be the tools used to make sense of a vast amount of constantly changing real world data. The machine learns as it goes. As technology advances, particularly in machine learning ability, it becomes harder and harder to say that a vehicle system will always conform to an understandable set of rules. Although my example is automotive, the same challenges are faced by aviation.

There’s a tendency to see such issues as over the horizon. They are not. Whereas the research, design and development communities are up to speed, there are large parts of the aviation community that are not ready for a step beyond inspection and conformity checking in the time-honoured way.

Yes, Airworthiness is alive and kicking. As a subject, it now must head into unfamiliar territory. Assumptions held and reinforced over decades must be revisited. Checking conformity to an approved design may no longer be sufficient to assure safety.

There are more questions than answers, but a lot of smart people are seeking those answers.

POST 1: Explainability is going to be one of the answers, I’m sure. See “Explained: How to tell if artificial intelligence is working the way we want it to” (MIT News, Massachusetts Institute of Technology).

POST 2: Legislation, known as the Artificial Intelligence Act: “‘Risks posed by AI are real’: EU moves to beat the algorithms that ruin lives” (Artificial intelligence (AI), The Guardian).

POST 3: The worlds of the smartphone and the cockpit are here: “How HUE Shaped the Groundbreaking Honeywell Anthem Cockpit.”

[1] In science, computing, and engineering, a black box is a device, system, or object which produces useful information without revealing information about its internal workings.