My daily routine once consisted of walking across a bridge over the Rhine to an office on Ottoplatz in Köln-Deutz[1]. That's in Cologne, Germany, on the eastern side of the river.

In the square outside the railway station is a small monument to a man called Otto. It is a small monument marking a massive transformation in the way transport has been powered for well over a century. It honours Nicolaus August Otto, who created the world's first viable four-stroke engine in 1876.

The internal combustion engine hasn't been banished, at least not yet. Otto could never have known the contribution his invention would make to our current climate crisis. But now, rapid change is underway in all aspects of transport, and it is just as radical as the impact of Otto's engine.

As the electrification of road transport gathers pace, so does the electrification of flying. That transformation opens new opportunities. Ideas that have been explored at length in sci-fi movies are now becoming practically achievable[2]. This is not the 23rd century. This is the 21st century. It is fascinating that in The Fifth Element the flying taxi, which is a key part of the story, still has a driver. So, will all the flying cars of the future have drivers?

I think we already know the answer: no, they will not. Initially, though, most of the electric aircraft now under design and development propose that a pilot (driver) will be on board. Some developers have been adventurous enough to suggest skipping that stage of the transition into operational service. The computing capability to build fully autonomous vehicles certainly exists.

The bigger question is: will the travelling public accept flying in a pilotless vehicle? Two concerns stand out in recent studies[3][4], and neither should be a surprise. One concerns passengers; the other concerns the communities that will see flying taxis every day of the week.

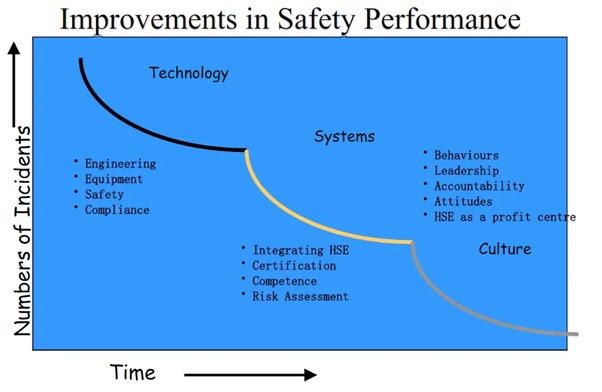

Public and passenger safety is the number one concern. I know that's easy to say and seems obvious, but studies have shown that people tend to take safety for granted. They treat it as a given, assuming the authorities will not let air taxis fly if they are unsafe.

The other major factor is noise. Historically, noise has prevented commercial helicopter passenger services from taking off. Strong objections come from neighbourhoods affected by aircraft constantly flying overhead. Occasional noise may be acceptable, but everyday operations, unless kept below strict thresholds, are likely to meet fierce resistance.

So, would you step into an air taxi with no pilot? People I have asked this question often react quickly with a firm "No." Then, after a conversation, the answer softens to "Maybe."

[1] https://www.ksta.de/koeln/innenstadt/ottoplatz-in-koeln-deutz-eroeffnet–das-muss-nicht-gruen-sein–2253900?cb=1665388649599&

[2] https://www.imdb.com/title/tt0119116/

[3] https://www.easa.europa.eu/en/newsroom-and-events/press-releases/easa-publishes-results-first-eu-study-citizens-acceptance-urban

[4] https://verticalmag.com/news/nasa-public-awareness-acceptance-of-aam-is-a-big-challenge/